ISLR Chapter 4 Solutions

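Solutions by Swapnil Sharma, July 9, 2017. Chapter 4: Classification.

The first conceptual exercise asks us to show that two expressions for the logistic regression model, the logistic function form and the logit (log-odds) form, are equivalent.

For the Bayes classifier with normal class densities, observe that the denominator of $p_k(x) = \pi_k f_k(x) / \sum_{l} \pi_l f_l(x)$ is a constant: it does not depend on the index $k$ in any way. Maximizing $p_k(x)$ over $k$ is therefore equivalent to maximizing the numerator $\pi_k f_k(x)$, or its logarithm.

In the dividend exercise, where we predict whether a company issues a dividend from last year's percent profit $X$, we have $\pi_{yes} = 0.8$, $\pi_{no} = 0.2$, $\mu_{yes} = 10$, $\mu_{no} = 0$ and $\widehat{\sigma^2} = 36$. Bayes' theorem gives the probability as:

$$p_{yes}(x) = \frac{\pi_{yes} f_{yes}(x)}{\pi_{yes} f_{yes}(x) + \pi_{no} f_{no}(x)}$$

For the starting-salary regression exercise from Chapter 3, the fitted coefficients are \(\hat{\beta}_{0}\) = 50, \(\hat{\beta}_{1}\) = 20, \(\hat{\beta}_{2}\) = 0.07, \(\hat{\beta}_{3}\) = 35, \(\hat{\beta}_{4}\) …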
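The denominator argument can be checked numerically. The sketch below assumes a single predictor and a variance shared by all classes, in which case the log of the numerator reduces (up to terms common to every class) to the linear discriminant $\delta_k(x) = x\mu_k/\sigma^2 - \mu_k^2/(2\sigma^2) + \log\pi_k$. It reuses the dividend parameters purely as an example; the helper names are illustrative, not taken from the original solutions.

```python
import math

def normal_pdf(x, mu, var):
    """Density of N(mu, var) evaluated at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def posteriors(x, priors, means, var):
    """Bayes' theorem: p_k(x) = pi_k f_k(x) / sum_l pi_l f_l(x)."""
    numerators = [p * normal_pdf(x, m, var) for p, m in zip(priors, means)]
    denominator = sum(numerators)  # identical for every class k
    return [n / denominator for n in numerators]

def discriminants(x, priors, means, var):
    """Linear discriminant: delta_k(x) = x*mu_k/var - mu_k**2/(2*var) + log(pi_k)."""
    return [x * m / var - m ** 2 / (2 * var) + math.log(p)
            for p, m in zip(priors, means)]

def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])

# Example parameters from the dividend exercise; class order is [yes, no]
priors, means, var = [0.8, 0.2], [10.0, 0.0], 36.0

for x in [-20.0, -5.0, 0.0, 2.0, 4.0, 10.0, 30.0]:
    same = argmax(posteriors(x, priors, means, var)) == argmax(discriminants(x, priors, means, var))
    print(x, same)  # the second field is True for every x
```

Both rules pick the same class at each point because the discriminant differs from $\log(\pi_k f_k(x))$ only by terms that are the same for every $k$.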

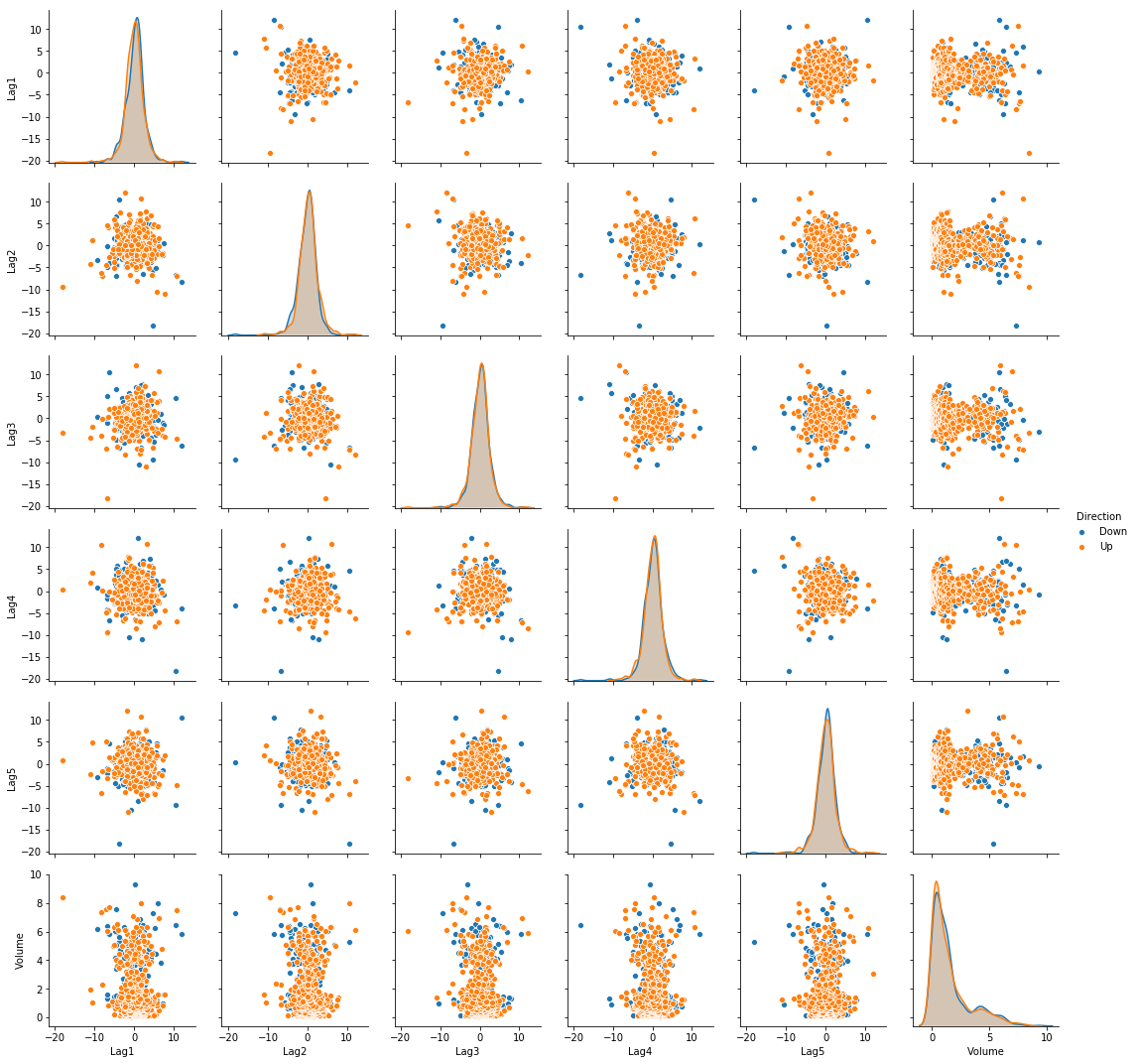

The salary-regression coefficients are obtained by using least squares to fit the model.

Classification involves predicting qualitative (categorical) responses.

Evaluating the normal density $f_k(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x-\mu_k)^2}{2\sigma^2}\right)$ at $x = 4$ with $\sigma^2 = 36$ gives $f_{yes}(4) = 0.04033$ and $f_{no}(4) = 0.05324$. Hence $p_{yes}(4) = \frac{0.8 \times 0.04033}{0.8 \times 0.04033 + 0.2 \times 0.05324} \approx 0.752$, so a company whose percent profit was 4 last year issues a dividend with probability of about 75.2%.
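The dividend arithmetic can be reproduced in a few lines. This is a sketch that assumes, as the exercise does, normal class densities with a shared variance; the variable names are illustrative.

```python
import math

def normal_pdf(x, mu, var):
    # Normal density N(mu, var) evaluated at x
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

pi_yes, pi_no = 0.8, 0.2    # prior class proportions
mu_yes, mu_no = 10.0, 0.0   # class means of last year's percent profit
var = 36.0                  # shared variance sigma^2
x = 4.0                     # observed percent profit

f_yes = normal_pdf(x, mu_yes, var)
f_no = normal_pdf(x, mu_no, var)
# Bayes' theorem for the posterior probability of "yes"
p_yes = pi_yes * f_yes / (pi_yes * f_yes + pi_no * f_no)

print(f"f_yes(4) = {f_yes:.5f}, f_no(4) = {f_no:.5f}, p_yes(4) = {p_yes:.3f}")
# f_yes(4) = 0.04033, f_no(4) = 0.05324, p_yes(4) = 0.752
```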

The two equations are the logistic function form, $p(X) = \frac{e^{\beta_0+\beta_1 X}}{1+e^{\beta_0+\beta_1 X}}$, and the logit (odds) form, $\frac{p(X)}{1-p(X)} = e^{\beta_0+\beta_1 X}$; starting from one, we rearrange it into the other expression.
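Writing the logistic function as $p(X) = e^{\beta_0+\beta_1 X}/(1+e^{\beta_0+\beta_1 X})$ (single-predictor case assumed), the equivalence with the odds form follows in two lines, and the steps reverse to recover $p(X)$:

$$
\begin{aligned}
1 - p(X) &= 1 - \frac{e^{\beta_0+\beta_1 X}}{1+e^{\beta_0+\beta_1 X}} = \frac{1}{1+e^{\beta_0+\beta_1 X}},\\
\frac{p(X)}{1-p(X)} &= \frac{e^{\beta_0+\beta_1 X}}{1+e^{\beta_0+\beta_1 X}} \cdot \left(1+e^{\beta_0+\beta_1 X}\right) = e^{\beta_0+\beta_1 X},\\
p(X) = e^{\beta_0+\beta_1 X}\bigl(1-p(X)\bigr) &\iff p(X)\left(1+e^{\beta_0+\beta_1 X}\right) = e^{\beta_0+\beta_1 X} \iff p(X) = \frac{e^{\beta_0+\beta_1 X}}{1+e^{\beta_0+\beta_1 X}}.
\end{aligned}
$$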

This page contains the solutions to the exercises proposed in 'An Introduction to Statistical Learning with Applications in R' (ISLR) by James, Witten, Hastie and Tibshirani [1].

The response \(Y\) in the salary regression is starting salary after graduation (in thousands of dollars).

Among its predictors, \(X_4\) is the interaction between GPA and IQ and \(X_5\) is the interaction between GPA and gender.

Logistic regression, linear discriminant analysis (LDA) and K-nearest neighbors (KNN) are among the most widely used classifiers.
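Of these, KNN is the easiest to sketch from scratch: a new point gets the majority label among its K closest training points. The function below is a minimal illustration, not the book's lab code (the ISLR labs use R); `knn_predict` and the toy data are hypothetical.

```python
from collections import Counter
import math

def knn_predict(train_x, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort training indices by Euclidean distance to the query point
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    # most_common(1) returns [(label, count)] for the most frequent label
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters
train_x = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.6), (5.0, 5.0), (5.5, 4.8), (4.9, 5.6)]
train_y = ["No", "No", "No", "Yes", "Yes", "Yes"]

print(knn_predict(train_x, train_y, (0.3, 0.3)))  # No
print(knn_predict(train_x, train_y, (5.1, 5.2)))  # Yes
```

Sorting every training point costs O(n log n) per query, which is fine for a sketch; larger data sets usually call for partial selection or a spatial index.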

Logistic Regression, Linear Discriminant Analysis, Quadratic Discriminant Analysis, K.
